Latest Developments

App Store for GPTs

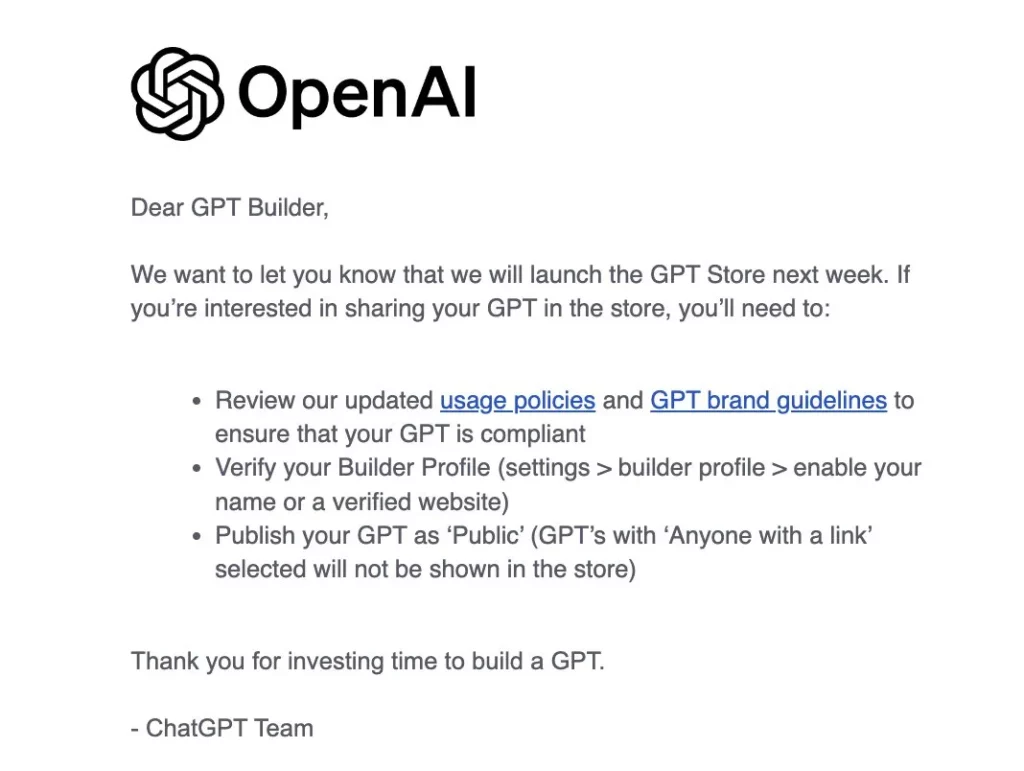

OpenAI launched GPTs at its Developer Day, letting people create custom GPTs built on their own data. Soon after, the internet was flooded with thousands of GPTs built by individuals for various purposes.

OpenAI announced that it will introduce an app store for GPTs, the GPT Store, where creators can publish their GPTs and earn real money. However, it is still unclear what revenue-sharing system, if any, the GPT Store will come with.

Full-body avatars with voice-driven expressions

Lifelike full-body avatars, created solely from voice! Meta and the University of California, Berkeley, have introduced a method that generates dynamic, realistic full-body avatars, complete with the gestures and expressions of real conversation, using only spoken audio as input. The method synthesizes detailed facial expressions, body gestures, and hand movements synchronized with the audio, making the digital avatar more authentic and responsive.

Highlights:

– The research introduces a composite motion model with three components: a facial motion model, a guide pose predictor, and a body motion model. Facial movements come from a conditional generative model driven by the audio input, while detailed body movements come from a generative process that combines vector quantization with conditional generative models.

– The study emphasizes the importance of photorealistic rendering for avatars. It shows that highly realistic avatars are needed to depict nuanced conversational gestures accurately, with its evaluation indicating a clear preference for them over mesh-based ones. This underscores how poorly untextured meshes convey fine gesture detail.

– The research is supported by a new, detailed dataset of two-person (dyadic) conversations, recorded in a multi-camera setup that allows accurate tracking and realistic 3D reconstruction of the participants. Covering a range of topics and emotional expressions, the dataset provides a solid basis for developing and testing motion models.
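The vector quantization step mentioned in the first highlight can be illustrated with a minimal sketch. It is not the researchers' implementation: the tiny 2-D codebook, the `quantize`, `encode`, and `decode` helpers, and the sample "poses" are all hypothetical, chosen only to show how continuous motion vectors can be mapped to discrete tokens that a generative model could then predict.

```python
import math

# Hypothetical 2-D codebook; a real system would learn a much larger one.
CODEBOOK = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]


def quantize(vector, codebook=CODEBOOK):
    """Return the index of the codebook entry nearest to `vector`."""
    return min(range(len(codebook)), key=lambda i: math.dist(vector, codebook[i]))


def encode(sequence, codebook=CODEBOOK):
    """Map each continuous vector in a sequence to a discrete token index."""
    return [quantize(v, codebook) for v in sequence]


def decode(tokens, codebook=CODEBOOK):
    """Map token indices back to their (approximate) continuous vectors."""
    return [codebook[t] for t in tokens]


# Made-up "pose" vectors standing in for real motion data.
poses = [(0.1, 0.2), (0.9, 0.1), (0.4, 0.8)]
tokens = encode(poses)
reconstructed = decode(tokens)
print(tokens, reconstructed)
```

The reconstruction is lossy by design: each pose snaps to its nearest codebook entry, which trades precision for a compact, discrete vocabulary.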

The Art of Prompting for Better LLM Responses

Prompting techniques are often overlooked, yet they hold the key to unlocking the full potential of LLMs. Recognizing this, a recent study has introduced a set of 26 guiding principles designed to improve how LLMs are prompted. And these are not just theoretical principles: the study reports that applying them led to an average 50% improvement in response quality across various LLMs.

Highlights:

– The study introduces 26 detailed prompting principles, organized into five categories. These include Prompt Structure and Clarity, Specificity and Information, and Complex Tasks and Coding Prompts. The principles are designed to cover a variety of user situations and interactions with LLMs, with the aim of improving the quality and relevance of model responses.
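To make the structure-and-clarity and specificity categories more concrete, here is a small illustrative sketch. It does not reproduce any of the study's 26 principles: the `build_prompt` helper and its section names are hypothetical, and it only shows one plausible way to turn a vague request into a prompt with an explicit task, audience, and output format.

```python
def build_prompt(task, audience, output_format, constraints=()):
    """Assemble a prompt with explicit sections instead of one vague sentence.

    The section names are illustrative assumptions, not taken from the study.
    """
    lines = [
        "### Task",
        task.strip(),
        "",
        "### Audience",
        audience.strip(),
        "",
        "### Output format",
        output_format.strip(),
    ]
    if constraints:
        lines += ["", "### Constraints"]
        lines += [f"- {c.strip()}" for c in constraints]
    return "\n".join(lines)


vague = "Tell me about vector quantization."
structured = build_prompt(
    task="Explain vector quantization in three sentences.",
    audience="A software engineer with no machine learning background.",
    output_format="Plain text, no equations.",
    constraints=["Define any jargon on first use."],
)
print(structured)
```

Compared with the `vague` one-liner, the structured version states who the answer is for and what shape it should take, which is the kind of clarity the categories above are aiming at.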

Performance Evaluation of Principles on Different Scales of LLMs

The principles were tested on LLMs of different scales: small (7 billion parameters), medium (13 billion), and large (70 billion, plus GPT-3.5/4). Two metrics were used for evaluation: Boosting, which measures the improvement in response quality, and Correctness, which focuses on the accuracy of responses.

For GPT-4, the principles improved quality and accuracy by 57.7% and 67.3%, respectively. Moving up the scale from LLaMA-2-7B to GPT-4, the performance improvement exceeded 40%. Beyond improving model output, the principles also help users understand and interact with LLMs. By writing clearer and more structured prompts, users can gain a better understanding of the capabilities and limitations of these models.
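As a rough illustration of how a percentage improvement like those above might be computed, the sketch below compares accuracy with and without improved prompts. It rests on assumptions: the study's exact metric definitions may differ, the correctness judgments are made-up sample data, and `accuracy` and `relative_improvement` are hypothetical helper names.

```python
def accuracy(flags):
    """Fraction of responses judged correct."""
    return sum(flags) / len(flags)


def relative_improvement(baseline, improved):
    """Percentage change of `improved` relative to `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (improved - baseline) / baseline * 100.0


# Hypothetical correctness judgments for the same five questions.
baseline_correct = [True, False, False, True, False]   # plain prompts
principled_correct = [True, True, False, True, True]   # improved prompts

base_acc = accuracy(baseline_correct)
new_acc = accuracy(principled_correct)
gain = relative_improvement(base_acc, new_acc)
print(f"accuracy {base_acc:.0%} -> {new_acc:.0%}, relative gain {gain:.1f}%")
```

Note that a relative gain depends heavily on the baseline, so the same absolute increase in accuracy looks larger for a model that starts out weaker.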

Professional Tools

1. RAGatouille:

– A tool designed to integrate state-of-the-art (SOTA) retrieval methods into any RAG pipeline, with a focus on convenience and ease of use. It aims to bridge the gap between complex information retrieval research and practical applications in RAG pipelines.

2. Dashy:

– An all-in-one application consolidating tools, notifications, and data into a customizable dashboard, enhancing productivity and efficiency with over 40 specialized utilities for various professional and personal needs.

3. Echo AI:

– Your personal interview preparation and training assistant, helping improve interview skills by focusing on behavioral questions with over 50 practical questions. You can record your answers, convert them into text, and receive feedback and a score. It syncs with iCloud for smooth practice across multiple devices.

4. Wally:

– Powered by artificial intelligence, this tool lets users create unique, shareable wallpapers by selecting themes, styles, and colors, offering a variety of artistic options and an easy-to-use interface.