In recent years, organizations have invested heavily in improving what customers can see: sleek user interfaces, faster websites, better SEO rankings, and higher conversion rates. These efforts make sense. They drive growth, visibility, and short-term results.

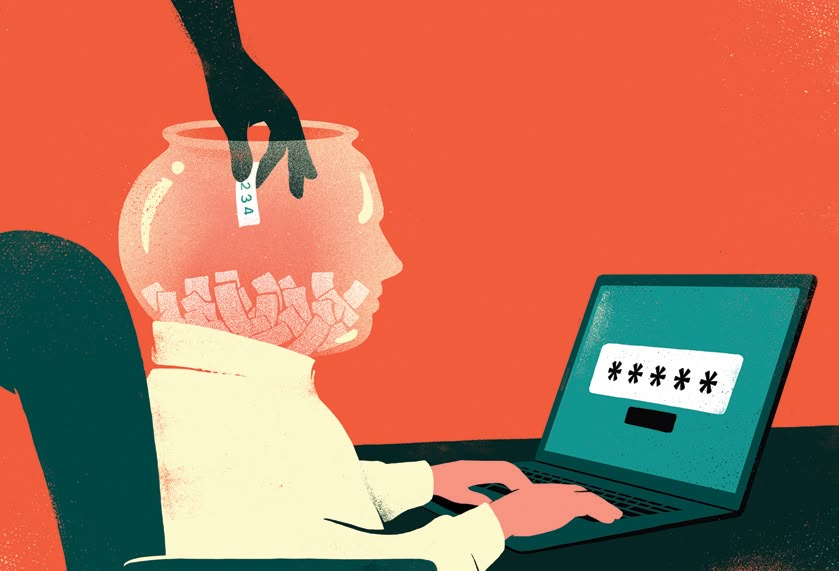

What often receives far less attention is what customers never see: how their data is stored, processed, protected, and governed behind the scenes. And yet, a single data breach can instantly erase years of trust, damage a brand’s reputation, and render every UX improvement meaningless.

As AI becomes embedded in daily operations, data is no longer just an asset. It is the foundation of decision-making, automation, and personalization. Protecting that foundation is no longer optional, especially in a globalized world where data flows across borders, cloud providers, and AI systems at unprecedented speed.

The Hidden Risk Behind “Growth-First” Digital Strategies

Many business leaders prioritize visible outcomes because they are easier to measure and justify. Conversion rates, traffic growth, and engagement metrics appear directly on dashboards. Security, by contrast, often feels abstract until something goes wrong.

This mindset creates a dangerous imbalance. Organizations optimize front-end performance while assuming that cloud providers, third-party tools, or default configurations will “take care of security.” In practice, this assumption is one of the most common root causes of data incidents.

According to IBM’s Cost of a Data Breach Report 2023, the global average cost of a data breach reached USD 4.45 million, the highest figure the report had recorded up to that point. Beyond financial loss, breaches lead to regulatory penalties, operational disruption, and long-term erosion of customer trust. For AI-driven systems, the consequences are even more severe: compromised data undermines model accuracy, governance, and credibility.

In other words, when data protection fails, everything built on top of it (UX, automation, AI insights) collapses with it.

Why AI Changes the Data Protection Equation

Traditional IT systems primarily store and retrieve data. AI systems actively learn from it. This distinction matters.

AI pipelines often involve:

- Aggregating data from multiple internal and external sources

- Processing sensitive behavioral, financial, or operational signals

- Generating predictions or recommendations that influence decisions

Each step introduces new attack surfaces and compliance risks. In a globalized environment, data may cross jurisdictions with different legal frameworks, such as GDPR, data localization laws, or public-sector confidentiality requirements.

For enterprises and government agencies, the question is no longer just “Is our data secure?” but also:

- Where is our data processed?

- Who has access to it, human and machine alike?

- Can we prove compliance, not just assume it?

Generic AI platforms often struggle to answer these questions clearly because they are designed for scale, not sovereignty.
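To make these three questions concrete, here is a minimal Python sketch of a data-residency check that also produces evidence of its own decision. Everything in it is an illustrative assumption rather than a description of any particular platform: the `ALLOWED_REGIONS` policy, the category and region names, and the `ProcessingRequest` fields are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy: the regions in which each data category may be processed.
ALLOWED_REGIONS = {
    "citizen_records": {"eu-west"},
    "web_analytics": {"eu-west", "us-east"},
}


@dataclass(frozen=True)
class ProcessingRequest:
    dataset: str
    category: str
    region: str      # where the data would be processed
    principal: str   # who is asking: a human user or a service account


def check_residency(req: ProcessingRequest) -> bool:
    """Return True only if the category is known and the region is permitted."""
    # Unknown categories fall back to an empty set, so they are denied by default.
    return req.region in ALLOWED_REGIONS.get(req.category, set())


def audit_record(req: ProcessingRequest) -> dict:
    """Build a record that can later be shown to an auditor."""
    return {
        "dataset": req.dataset,
        "category": req.category,
        "region": req.region,
        "principal": req.principal,
        "allowed": check_residency(req),
    }


if __name__ == "__main__":
    request = ProcessingRequest(
        dataset="permits_2023",
        category="citizen_records",
        region="us-east",
        principal="svc-recommender",
    )
    print(audit_record(request))
```

The point of the sketch is that "where", "who", and "can we prove it" are answered by the same record: the decision and its evidence are produced together instead of being reconstructed after an incident.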

The Illusion of Security in Generic AI Platforms

Many off-the-shelf AI solutions promise “enterprise-grade security,” but their architectures are rarely designed around the specific regulatory, operational, or sovereignty needs of individual organizations. Common challenges include:

- Limited control over where data is stored or processed

- Black-box model behavior with unclear data lineage

- Shared infrastructure that increases exposure risk

- Inflexible governance models that do not align with public-sector or regulated enterprise requirements

These limitations may be acceptable for experimentation. They are unacceptable for mission-critical systems handling sensitive data. This is where tailored AI frameworks become essential.

Tailored AI Frameworks: Security by Design, Not by Assumption

At DXTech, we approach AI security as an architectural discipline, not a compliance checkbox. Our AI frameworks are designed to adapt to the realities of government and enterprise environments, where data sovereignty, auditability, and control are non-negotiable.

A secure AI framework starts with clear boundaries:

- Data ownership remains with the organization at all times

- Processing environments are defined and governed, not abstracted away

- Access controls are explicit, role-based, and continuously monitored

By designing systems around these principles, security becomes embedded into workflows rather than enforced after deployment.
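As an illustration of the third boundary, the sketch below shows what explicit, role-based, monitored access could look like in Python. It is not DXTech's implementation: the role names, the permission table, and the `AccessGuard` class are hypothetical, and a production system would back them with an identity provider and tamper-resistant log storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission table; humans and machines share one model.
ROLE_PERMISSIONS = {
    "data_steward": {"read", "write", "export"},
    "analyst": {"read"},
    "inference_service": {"read"},
}


@dataclass
class AccessGuard:
    """Checks a role against the table and logs every decision."""
    audit_log: list = field(default_factory=list)

    def is_allowed(self, principal: str, role: str, action: str, resource: str) -> bool:
        # Unknown roles map to an empty permission set, so they are denied.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Denials are logged as well as grants, so monitoring sees both.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "principal": principal,
            "role": role,
            "action": action,
            "resource": resource,
            "allowed": allowed,
        })
        return allowed


if __name__ == "__main__":
    guard = AccessGuard()
    print(guard.is_allowed("alice", "analyst", "read", "customer_profiles"))
    print(guard.is_allowed("alice", "analyst", "export", "customer_profiles"))
    print(len(guard.audit_log))
```

Because the check and the log entry live in the same call, access cannot be granted without leaving a trace, which is one simple way to embed a control into the workflow rather than add it after deployment.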

From Risk Mitigation to Competitive Advantage

Organizations that take data protection seriously often discover an unexpected benefit: trust scales faster than features. Customers are increasingly aware of data risks. Regulators are more proactive. Partners demand higher standards. In this environment, robust data protection becomes a differentiator, not a constraint.

AI systems built on secure, sovereign foundations are adopted more confidently, integrated more deeply, and sustained over time. They enable innovation without sacrificing control, a balance that defines mature AI adoption.

Building AI That Endures

At DXTech, our experience across government and enterprise projects has reinforced a simple truth: AI systems only succeed when the data beneath them is protected as carefully as the outcomes they promise.

Security is not a blocker to innovation. It is what allows innovation to endure in a complex, interconnected world. For organizations planning their next phase of AI investment, the most important question may not be how advanced the model is, but how well the data behind it is protected.

Because in the end, a single breach can undo years of growth, while a secure foundation turns AI into a capability you can trust, scale, and rely on.